AI Is Already Changing Healthcare and Biotech—Here’s Proof

From brain implants to hospital assistants—AI in healthcare is real now

1. Brain-to-Voice: AI Turns Thoughts Into Speech

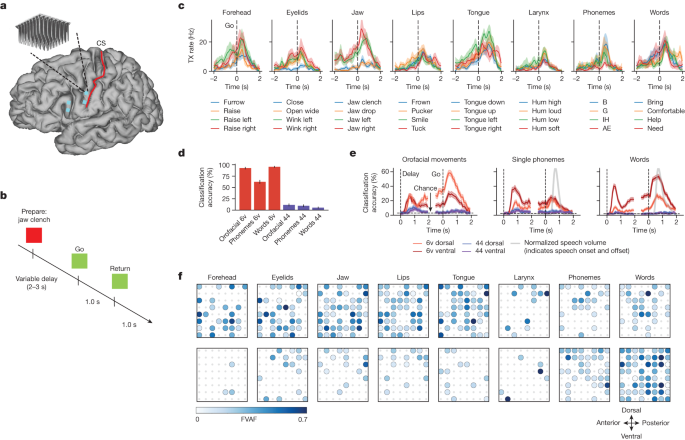

Research teams at UCSF and Stanford have developed neuroprostheses that help people who can’t speak because of stroke or paralysis communicate again, using only their brain activity.

Each system uses electrodes implanted on or in the brain to capture electrical signals from the areas that control speech.

Those signals are decoded by AI models trained on each participant’s own brain activity, which map them to words and phrases.

The UCSF system goes further: it turns the decoded output into natural-sounding speech delivered through a digital avatar that mimics the patient’s facial expressions.

These aren’t just lab demos. Both systems have been tested with real patients, including a woman with ALS who could no longer speak clearly; the Stanford system translated her attempted speech into text at more than 60 words per minute.
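To make the decoding step concrete, here is a deliberately simplified Python sketch. It assumes each attempted word produces a short, hypothetical feature vector (for example, average activity per electrode) and uses a nearest-centroid classifier, a much simpler technique swapped in for illustration; the real systems rely on far more sophisticated deep learning models trained on much richer signals.

```python
import math

# Hypothetical training data: each recording is a small feature vector
# (e.g. activity level per electrode) labelled with the word the patient tried to say.
TRAINING = {
    "hello": [[0.9, 0.1, 0.2], [1.0, 0.2, 0.1]],
    "water": [[0.1, 0.8, 0.3], [0.2, 0.9, 0.2]],
    "yes":   [[0.2, 0.1, 0.9], [0.1, 0.3, 1.0]],
}

def centroid(vectors):
    """Average a list of equal-length vectors component by component."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]

def train(data):
    """'Training' here just stores one average activity pattern per word."""
    return {word: centroid(vectors) for word, vectors in data.items()}

def decode(model, features):
    """Return the word whose average pattern is closest (Euclidean distance) to the new signal."""
    return min(model, key=lambda word: math.dist(model[word], features))

def decode_sentence(model, frames):
    """Decode a sequence of signal snapshots into a sentence, one word per snapshot."""
    return " ".join(decode(model, frame) for frame in frames)

model = train(TRAINING)
print(decode_sentence(model, [[0.95, 0.15, 0.1], [0.15, 0.2, 0.95]]))
```

The core idea carries over to the real systems: learn a mapping from each person’s recorded brain activity to language units, then apply it to new signals as they arrive.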

What to Learn:

This is a clear example of AI helping restore lost human abilities. It combines neuroscience, AI modeling, and assistive communication—a powerful direction for healthcare and biotech innovation.

This is one of the first working examples of AI bridging biology and language.

2. XTrimoPGLM: The Protein Language Model from BioMap & Tsinghua

This AI model, XTrimoPGLM, is a 100-billion-parameter protein language model. Among other tasks, it can be used to predict a protein’s 3D structure directly from its amino acid sequence.

How it works: Proteins are made of chains of amino acids, and the order of those amino acids largely determines how the protein folds into its 3D shape. XTrimoPGLM reads a raw amino acid sequence with a transformer, the same kind of deep neural network behind GPT-style language models, and uses what it has learned to predict the folded structure.

Why it’s important: Understanding protein structures is crucial for drug discovery, designing vaccines, and modeling rare diseases—because proteins are the key to how cells function.

Its speed: Determining a protein’s structure experimentally, for example with X-ray crystallography or cryo-electron microscopy, can take months or longer, while an AI model can produce a predicted structure in a fraction of that time, potentially shortening the early stages of drug development.

What to Learn:

XTrimoPGLM is a protein language model trained on a very large collection of protein sequences. It unifies two training objectives: filling in masked amino acids (useful for understanding tasks such as structure and function prediction) and generating sequences one amino acid at a time (useful for designing new proteins).

Think of it as ChatGPT for proteins: just as GPT-4 learns patterns in text, XTrimoPGLM learns patterns in amino acid sequences and uses them to predict properties such as structure.
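The “language model for proteins” idea can be sketched in a few lines of Python. This toy example treats each amino acid letter as a token and builds the two kinds of training examples protein language models commonly learn from: masked-token prediction and next-token prediction. The vocabulary, special tokens, masking rate, and example sequence are illustrative assumptions, not XTrimoPGLM’s actual tokenizer or training setup.

```python
import random

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"           # the 20 standard amino acids
SPECIAL_TOKENS = ["<pad>", "<mask>", "<unk>"]  # assumed special tokens
VOCAB = {tok: i for i, tok in enumerate(SPECIAL_TOKENS + list(AMINO_ACIDS))}
MASK_ID = VOCAB["<mask>"]
IGNORE = -100  # "no loss at this position" (PyTorch's CrossEntropyLoss default ignore_index)

def tokenize(sequence):
    """Map each residue letter to an integer ID, like words in a text model."""
    return [VOCAB.get(residue, VOCAB["<unk>"]) for residue in sequence.upper()]

def masked_example(token_ids, mask_rate=0.15, seed=0):
    """Masked objective (simplified): hide some residues; the model must predict them."""
    rng = random.Random(seed)
    inputs, labels = [], []
    for tid in token_ids:
        if rng.random() < mask_rate:
            inputs.append(MASK_ID)
            labels.append(tid)      # loss is computed only where a residue was hidden
        else:
            inputs.append(tid)
            labels.append(IGNORE)
    return inputs, labels

def next_token_pairs(token_ids):
    """Autoregressive objective: predict each residue from all the residues before it."""
    return [(token_ids[:i], token_ids[i]) for i in range(1, len(token_ids))]

ids = tokenize("MKTAYIAKQR")  # an arbitrary example sequence
inputs, labels = masked_example(ids, mask_rate=0.3)
print(ids)
print(inputs)
print(next_token_pairs(ids)[:2])
```

A real model then learns, over billions of such examples, which residues tend to appear in which contexts; that learned “grammar” of proteins is what downstream structure and function predictions build on.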

3. Seattle Children’s Hospital Uses AI in Daily Clinical Work

Seattle Children’s is one of the first pediatric hospitals in the U.S. to integrate generative AI directly into clinical workflows, and it is doing so with safeguards built into the design.

They’ve enhanced their long-standing Clinical Standard Work (CSW) system with a generative AI assistant built on Google Cloud infrastructure. CSW is a structured, evidence-based approach that guides healthcare providers step-by-step through diagnosis and treatment plans. It’s been used at Seattle Children’s since 2010 and adopted in over 40 hospitals.

Here’s what the new AI assistant does:

Delivers real-time prompts and care guidance based on CSW pathways

Flags safety considerations and critical next steps for providers

Helps reduce clinical variation by standardizing best practices

Saves time by surfacing relevant information without digging through records or documentation

The assistant is powered by a fine-tuned large language model (LLM) trained on Seattle Children’s proprietary care guidelines. It runs in a HIPAA-compliant environment, is fully auditable, and doesn’t make decisions—it simply supports clinical teams with context-aware suggestions.
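The assistive, auditable pattern described above can be illustrated with a small, hypothetical Python sketch: the assistant walks a care pathway, suggests the next step with any safety note attached, and logs every suggestion and every clinician decision, but never acts on its own. The class names and placeholder steps are invented for illustration and do not reflect Seattle Children’s actual system, which uses an LLM rather than fixed rules.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

@dataclass
class PathwayStep:
    """One step in a hypothetical standardized care pathway."""
    name: str
    safety_note: str = ""  # optional consideration to flag for the care team

@dataclass
class Suggestion:
    step: str
    safety_note: str

@dataclass
class PathwayAssistant:
    """Assistive only: it suggests steps and records what humans decide, but never acts."""
    steps: list
    audit_log: list = field(default_factory=list)

    def suggest(self, completed: set) -> Optional[Suggestion]:
        """Return the first pathway step not yet completed, with any safety note."""
        for step in self.steps:
            if step.name not in completed:
                self._log("assistant", "suggested", step.name)
                return Suggestion(step.name, step.safety_note)
        return None  # pathway finished; nothing to suggest

    def record_decision(self, clinician: str, step_name: str, accepted: bool) -> None:
        """The clinician makes the call; the assistant only logs it for later audit."""
        self._log(clinician, "accepted" if accepted else "dismissed", step_name)

    def _log(self, actor: str, event: str, step_name: str) -> None:
        timestamp = datetime.now(timezone.utc).isoformat()
        self.audit_log.append((timestamp, actor, event, step_name))

# Placeholder steps for illustration only; not real clinical guidance.
assistant = PathwayAssistant(steps=[
    PathwayStep("Initial assessment"),
    PathwayStep("Review medication history", safety_note="Confirm allergies first"),
    PathwayStep("Discuss plan with family"),
])

tip = assistant.suggest(completed={"Initial assessment"})
print(tip)
assistant.record_decision("dr_example", tip.step, accepted=True)
for entry in assistant.audit_log:
    print(entry)
```

Keeping the decision in a separate `record_decision` call, attributed to a named clinician, is what keeps a design like this assistive rather than autonomous, and the timestamped log is what makes it auditable.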

What to Learn:

This is a model example of responsible AI in healthcare—not replacing doctors, but augmenting their decision-making in a structured, tested framework. It’s assistive, not autonomous, and it’s designed to be safe, explainable, and embedded in real workflows.

4. How Google’s AI Tools Are Powering Real-World Use

At Google Cloud Next 2024, one theme was clear: AI isn’t just experimental—it’s driving real business and government outcomes at scale.

Here’s how it’s already being used in production:

McDonald’s is using Gemini (Google’s family of large language models) to streamline operations and automate internal workflows.

Nevada’s Department of Employment, Training and Rehabilitation (DETR) built a system using BigQuery and Gemini to process unemployment claim appeals, cutting resolution times by 75%.

Kraft Heinz is creating full marketing campaigns using generative AI—copy, images, and variations across channels.

Lowe’s uses Vertex AI Search to power product recommendations in real time, improving customer experience and purchase conversion.

Mercado Libre is scaling personalized shopping with AI across Latin America.

On the creative side, Google and Adobe partnered to bring generative AI capabilities directly into Photoshop, Premiere, and other Adobe tools, making high-quality content creation faster and more accessible.

On the security side:

Google introduced GUS (Generative AI User Safety), a system designed to stop prompt injection attacks and data leaks from within LLMs.

They also agreed to acquire Wiz, one of the fastest-growing cloud security startups, to reinforce enterprise-grade AI safety and governance.

What to Learn:

AI adoption is happening now across sectors, from marketing and operations to government and retail. The key tools? Vertex AI, BigQuery, and Gemini. These systems are being used not only by engineers but also by operations teams, marketers, and public agencies.

🧠 Nugget of the Week: What Is a Neural Network?

Neural networks are algorithms loosely inspired by the way neurons in the brain connect and signal to each other.

They’re made of layers of “neurons” that pass information forward.

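Each connection between neurons has a weight. During training, those weights are adjusted little by little, typically with backpropagation and gradient descent, so the network’s errors on the data shrink over time.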

Most modern AI models (like GPT or image generators) are built on large, deep neural networks.
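Here is a minimal sketch of the ideas above: a single artificial neuron computes a weighted sum of its inputs, and training nudges each weight to reduce its error. It assumes a sigmoid activation and plain gradient descent on a toy task (learning logical OR); real networks stack many such neurons in layers and train them with backpropagation.

```python
import math
import random

def sigmoid(z):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

class Neuron:
    """One artificial neuron: weighted sum of inputs plus a bias, passed through a sigmoid."""

    def __init__(self, n_inputs, seed=0):
        rng = random.Random(seed)
        self.weights = [rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
        self.bias = 0.0

    def forward(self, inputs):
        z = sum(w * x for w, x in zip(self.weights, inputs)) + self.bias
        return sigmoid(z)

    def train_step(self, inputs, target, lr=0.5):
        """One gradient-descent step on cross-entropy loss (its gradient w.r.t. z is output - target)."""
        error = self.forward(inputs) - target
        self.weights = [w - lr * error * x for w, x in zip(self.weights, inputs)]
        self.bias -= lr * error

# Learn logical OR from its four examples.
DATA = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
neuron = Neuron(n_inputs=2)
for epoch in range(1000):
    for inputs, target in DATA:
        neuron.train_step(inputs, target)

for inputs, target in DATA:
    print(inputs, round(neuron.forward(inputs), 3), "target:", target)
```

Because OR is linearly separable, one neuron is enough; a problem like XOR needs at least one hidden layer, which is where deeper networks come in.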

Why it matters:

Without neural networks, there’d be no GPT, no image generation, and no AI tools making healthcare smarter.

🧩 Save this nugget. We’ll build on it next week.